Part 1: Prepping the image and using ECR

Recently, I wanted to look at turning my website into a cloud-native application, one designed to meet cloud demands such as auto-scaling, self-healing, rolling updates, rollbacks, and more.

I also wanted to use it as an opportunity to explore what Amazon Web Services (AWS) offers to achieve this.

The website I'm working with is static: there isn't much to it besides some HTML and CSS.

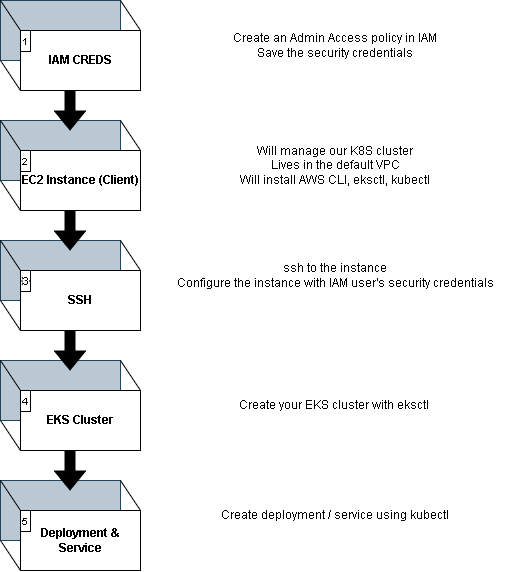

Since the site currently runs directly on an EC2 instance, we need to containerize it. The image below gives a broad overview of the process: migrating the website into a container and then pushing the image to an ECR repository. Let's jump in!

To set up the HTML for our site, I have some dummy code below. You can use this, or feel free to use your own:

```html
<!DOCTYPE html>
<html>
<body>
<h1>My First Heading</h1>
<p>My first paragraph.</p>
</body>
</html>
```

1. IAM Creation

First, create the IAM user and its credentials. In the IAM console, create a user with the AdministratorAccess policy attached. Then, under that user's security credentials, create an access key and save the Access Key ID and Secret Access Key in a secure location. You'll need both later when we configure the AWS CLI.
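If you already have CLI access with IAM permissions somewhere (for example, AWS CloudShell), the same user can be created from the command line. This is a minimal sketch: the default user name `ecr-builder` is hypothetical, and the policy defaults to the AdministratorAccess managed policy used above. You could pass a narrower managed policy ARN as the second argument if you only need ECR.

```bash
# Sketch: create an IAM user, attach a managed policy, and issue an access key.
# Assumes an already-configured AWS CLI with IAM permissions (e.g. AWS CloudShell).
# "ecr-builder" is a hypothetical default user name.
create_admin_user() (
  set -euo pipefail
  user="${1:-ecr-builder}"
  policy_arn="${2:-arn:aws:iam::aws:policy/AdministratorAccess}"

  aws iam create-user --user-name "$user"
  aws iam attach-user-policy --user-name "$user" --policy-arn "$policy_arn"

  # Prints the access key ID and secret key; the secret is shown only once.
  aws iam create-access-key --user-name "$user" \
    --query 'AccessKey.[AccessKeyId,SecretAccessKey]' --output text
)
```

Usage: `create_admin_user ecr-builder`. The function body runs in a subshell, so `set -euo pipefail` stops at the first failing call without changing your interactive shell's options.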

2. Launch EC2 Client

Launch your EC2 instance. For my setup I chose Amazon Linux 2023; the AMI I used is:

`al2023-ami-2023.4.20240401.1-kernel-6.1-x86_64`
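The console is the simplest way to launch the instance, but the AWS CLI can do it too. A sketch under some assumptions: `KEY_NAME` and `SG_ID` are placeholders for your own key pair and security group, the `t2.micro` default is an arbitrary choice, and the public SSM parameter resolves to the *latest* Amazon Linux 2023 AMI in your region, which may be newer than the exact AMI named above.

```bash
# Sketch: launch an Amazon Linux 2023 instance from the AWS CLI.
latest_al2023_ami() {
  # Public SSM parameter that tracks the newest AL2023 x86_64 AMI in the region.
  aws ssm get-parameters \
    --names /aws/service/ami-amazon-linux-latest/al2023-ami-kernel-default-x86_64 \
    --query 'Parameters[0].Value' --output text
}

launch_instance() (
  set -euo pipefail
  key_name="$1"                    # placeholder: existing EC2 key pair name
  sg_id="$2"                       # placeholder: SG allowing SSH (22) and HTTP (80)
  instance_type="${3:-t2.micro}"   # assumption: any small instance type works

  aws ec2 run-instances \
    --image-id "$(latest_al2023_ami)" \
    --instance-type "$instance_type" \
    --key-name "$key_name" \
    --security-group-ids "$sg_id" \
    --count 1 \
    --query 'Instances[0].InstanceId' --output text
)
```

Usage: `launch_instance KEY_NAME SG_ID` prints the new instance ID.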

Once the instance is provisioned, we need to update its packages, install Docker, start the service, and verify that the AWS CLI is present. You'll run these commands after you SSH to the instance in step 3. You can save them as a script like the one below or run them directly on the CLI:

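```bash
#!/bin/bash
# Update installed packages
sudo yum update -y

# Install Docker. (amazon-linux-extras exists only on Amazon Linux 2,
# not on Amazon Linux 2023, so it is not used here.)
sudo yum install -y docker

# Start Docker now and enable it at boot
sudo systemctl start docker
sudo systemctl enable docker

# Let ec2-user run docker without sudo (log out and back in for this to apply)
sudo usermod -a -G docker ec2-user
```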

With Docker installed, check that the AWS CLI is present by running `aws --version` (Amazon Linux 2023 ships with it). If for some reason it isn't (maybe you used a different AMI?), install it:

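```bash
#!/bin/bash
# Download the AWS CLI v2 installer
curl "https://awscli.amazonaws.com/awscli-exe-linux-x86_64.zip" -o "awscliv2.zip"

# unzip is needed to extract the installer
sudo yum update -y
sudo yum install unzip -y
unzip awscliv2.zip

# Run the bundled installer
sudo ./aws/install
```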
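To make this step safe to re-run, the install can be skipped when `aws` is already on the `PATH`. A minimal sketch, assuming a yum-based AMI like the one above; it downloads into a throwaway temporary directory instead of the current one.

```bash
# Sketch: install AWS CLI v2 only if the "aws" command is missing.
ensure_aws_cli() (
  set -euo pipefail
  if command -v aws >/dev/null 2>&1; then
    aws --version        # already installed; nothing to do
    exit 0               # exits only this function's subshell
  fi
  workdir="$(mktemp -d)" # keep installer files out of your site directory
  cd "$workdir"
  sudo yum install -y unzip
  curl -fsSL "https://awscli.amazonaws.com/awscli-exe-linux-x86_64.zip" -o awscliv2.zip
  unzip -q awscliv2.zip
  sudo ./aws/install
  aws --version
)
```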

3. SSH to EC2 Instance

Remember how to SSH to an instance? It's fairly straightforward from a Linux terminal or Git Bash on Windows. This assumes you stuck with the AMI recommended earlier; if not, replace `ec2-user` with the default user for your AMI:

```bash
ssh -i your.pem ec2-user@EC2-IP
```
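One common snag: `ssh` refuses to use a private key that other users can read and reports it as an unprotected key file. The sketch below restricts the key's permissions before connecting; `your.pem` and `EC2-IP` are the same placeholders as above, and the optional third argument overrides the login user.

```bash
# Sketch: lock down the key file, then connect.
fix_key_perms() {
  chmod 400 "$1"   # owner read-only; ssh rejects group/world-readable keys
}

ssh_ec2() {
  local key="$1" host="$2" user="${3:-ec2-user}"  # e.g. "ubuntu" on Ubuntu AMIs
  fix_key_perms "$key"
  ssh -i "$key" "${user}@${host}"
}
```

Usage: `ssh_ec2 your.pem EC2-IP`.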

Now run the Docker install commands (and the AWS CLI install, if needed). Then check both tools with:

```bash
docker --version
aws --version
```
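Note that `docker --version` only confirms the client binary is installed; it doesn't prove the daemon is running or that `ec2-user` can reach it without `sudo` (the `usermod` change only applies after you log out and back in). A quick check, as a sketch:

```bash
# Sketch: confirm the Docker daemon is reachable as the current user.
check_docker_access() {
  if docker info >/dev/null 2>&1; then
    echo "Docker daemon reachable as $(id -un)"
  elif ! id -nG | grep -qw docker; then
    # id -nG shows the groups of the current session, not /etc/group
    echo "$(id -un) is not in the docker group yet; log out and SSH back in" >&2
    return 1
  else
    echo "Docker daemon not reachable; try: sudo systemctl start docker" >&2
    return 1
  fi
}
```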

Next, configure the AWS CLI on the instance with the IAM credentials from step 1. You'll be prompted for the access key ID, secret access key, default region, and output format (for example, `json`):

```bash
aws configure
```
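To confirm the credentials work, ask STS which identity the CLI is using; it should print the ARN of the IAM user from step 1. The region and output format can also be set non-interactively, while entering the keys at the interactive prompt keeps them out of your shell history. A sketch, where `us-east-1` is only an example region:

```bash
# Sketch: set CLI defaults without prompts, then verify the identity.
configure_defaults() {
  aws configure set region "${1:-us-east-1}"  # example region; use your ECR region
  aws configure set output json
}

whoami_aws() {
  # Prints the ARN of the credentials the CLI is currently using.
  aws sts get-caller-identity --query Arn --output text
}
```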

4. Create the HTML content

Now let's create the site's static HTML. Use the HTML from above or your own; either way, do the following:

```bash
vim index.html
# Press i to enter insert mode, paste the HTML, then press Esc
# Type :wq and press Enter to write the file and exit vim
```
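If you'd rather skip the editor, a shell here-document writes the same file in one command. This sketch uses the sample HTML from above; quoting `'EOF'` stops the shell from expanding anything inside the HTML.

```bash
# Write index.html without an editor (sample HTML from this post).
cat > index.html <<'EOF'
<!DOCTYPE html>
<html>
<body>
<h1>My First Heading</h1>
<p>My first paragraph.</p>
</body>
</html>
EOF
```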

5. Create the Dockerfile

Now let's create the Dockerfile. Put it in your site's document root, the same directory as `index.html`:

```bash
vim Dockerfile
```

Add the following content (with comments). I'm using `nginx:alpine` because it's lightweight and meets our needs:

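```dockerfile
# Nginx base image (small Alpine-based variant)
FROM nginx:alpine

# Copy the website files into nginx's default web root
COPY . /usr/share/nginx/html

# Document the ports the container listens on (EXPOSE does not publish them).
# The default nginx config only serves HTTP on 80; 443 needs a TLS server block.
EXPOSE 80
EXPOSE 443

# Run nginx in the foreground so the container keeps running
CMD ["nginx", "-g", "daemon off;"]
```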
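Because `COPY . /usr/share/nginx/html` copies the entire build context, anything else in the directory ends up in the image and is served by nginx, including the Dockerfile itself. If you downloaded `awscliv2.zip` and extracted `aws/` into the same directory in step 2, those would be copied too. A `.dockerignore` file keeps them out; this is a minimal sketch, so extend the list with any other files that live alongside your site.

```bash
# Exclude build-only files from COPY (Docker still reads the Dockerfile itself).
cat > .dockerignore <<'EOF'
Dockerfile
.dockerignore
.git
awscliv2.zip
aws
EOF
```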

6. Create the Docker image

Let's build the image with Docker. Make sure you're in the same directory as your Dockerfile; `myimagename` is just an example, so use any name you like:

```bash
docker build -t myimagename .
```

Check that the image was created:

```bash
docker image ls
```
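Since the end goal includes rolling updates and rollbacks, it can help to give each build a version tag in addition to `latest`, so an older image can later be redeployed by tag. A sketch; the `v1` tag is an arbitrary example:

```bash
# Sketch: build once, tag twice (a version tag plus "latest").
build_image() (
  set -euo pipefail
  name="${1:-myimagename}"
  version="${2:-v1}"        # example version tag
  docker build -t "${name}:${version}" -t "${name}:latest" .
  docker image ls "$name"   # both tags should share the same IMAGE ID
)
```

Usage: `build_image myimagename v2` for the next revision.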

7. Test the Container

Start a container from the image we just built:

```bash
docker run -d -p 80:80 myimagename
```

NGINX listens on port 80 inside the container, and `-p 80:80` publishes that port on port 80 of the EC2 host. If your security group allows inbound HTTP (TCP port 80) and no host firewall blocks it, you can browse to the instance's public IP address and see the site. Give it a try and make sure it works before we push to ECR.
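If the page doesn't load in your browser, the security group is the usual culprit. Below is a sketch, assuming `SG_ID` is a placeholder for the instance's security group: one function opens port 80 to everyone (`0.0.0.0/0`; narrow the CIDR to your own IP if you prefer), and the other checks the page from the instance itself with `curl`. The default URL and expected text (the heading from the sample HTML) are assumptions.

```bash
# Sketch: allow inbound HTTP, then smoke-test the running container.
open_http() {
  aws ec2 authorize-security-group-ingress \
    --group-id "$1" --protocol tcp --port 80 --cidr 0.0.0.0/0
}

smoke_test() {
  local url="${1:-http://localhost/}"
  local expected="${2:-My First Heading}"
  # If curl fails, grep sees no input and the FAIL branch runs.
  if curl -fsS "$url" | grep -q "$expected"; then
    echo "OK: $url serves the site"
  else
    echo "FAIL: check 'docker ps' and 'docker logs <container>'" >&2
    return 1
  fi
}
```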

8. Create ECR Repo

Once you've verified that the container works and the site is viewable, we can push to ECR. First, create a repository: open the Amazon ECR console and click the “Create repository” button.

You can choose whatever settings you want for your repo, but I typically follow a least-privilege model, so I set mine to private. A public repo is fine too, but anyone will be able to pull your image, so use caution.
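The same private repository can be created from the CLI. A sketch, assuming the repository is named after the image (`myimagename`) and that the region comes from your `aws configure` defaults; scan-on-push is an optional extra. Repositories created with `aws ecr` are private, while public ones go through the separate `aws ecr-public` commands.

```bash
# Sketch: create a private ECR repository and print its URI.
create_repo() {
  aws ecr create-repository \
    --repository-name "${1:-myimagename}" \
    --image-scanning-configuration scanOnPush=true \
    --query 'repository.repositoryUri' --output text
}
```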

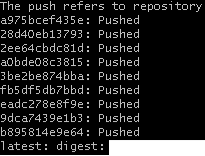

9. Push Image to ECR Repo

Almost there! Let's push our image to the ECR repo we just created. Click on the new repository, then click the “View Push Commands” button. The interface lists four steps:

a. Retrieve an authentication token and authenticate your Docker client to the registry via the CLI

b. Build the Docker image (we already did this!)

c. Tag your image so you can push

d. Push the image to the new repo.
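The four steps above can be scripted roughly as follows. This is a sketch under assumptions: the repository has the same name as the local image, `us-east-1` is only an example region, and the account ID is looked up through STS. The console's “View Push Commands” dialog shows the exact commands for your repo.

```bash
# Sketch of push steps a-d for a private repo named like the local image.
ecr_registry() {
  # Registry host for an account ID ($1) and region ($2).
  echo "${1}.dkr.ecr.${2}.amazonaws.com"
}

push_to_ecr() (
  set -euo pipefail
  image="${1:-myimagename}"
  region="${2:-us-east-1}"   # example region
  account_id="$(aws sts get-caller-identity --query Account --output text)"
  registry="$(ecr_registry "$account_id" "$region")"

  # a. Authenticate the Docker client to the registry
  aws ecr get-login-password --region "$region" \
    | docker login --username AWS --password-stdin "$registry"
  # b. Build: already done in step 6
  # c. Tag the local image with the repository URI
  docker tag "${image}:latest" "${registry}/${image}:latest"
  # d. Push the image to the repo
  docker push "${registry}/${image}:latest"
)
```

Usage: `push_to_ecr myimagename us-east-1`.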

Good job! If everything went well, `docker push` finishes by printing the pushed tag along with its `sha256` digest.

We can also navigate back to our AWS ECR web interface and see that the new image is available.

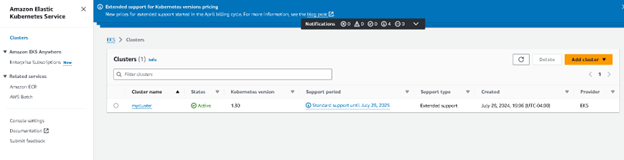
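You can also confirm the push from the CLI. A sketch, assuming the repository name `myimagename` used throughout; it lists each image's first tag and push time.

```bash
# Sketch: list images in the repository with their tag and push time.
list_repo_images() {
  aws ecr describe-images \
    --repository-name "${1:-myimagename}" \
    --query 'imageDetails[].[imageTags[0],imagePushedAt]' \
    --output table
}
```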

In the next part we will use this image along with Elastic Kubernetes Service (EKS) to deploy our scaled application.
